3D Camera Projection in Nuke

- joshknatt

- Feb 18, 2022

Following last week's look at deeper colour correction, 2D tracking and lens distortion, we are now ready to prepare a sequence in Nuke for a 3D camera projection that can then be taken into Maya to add CG elements to a scene.

Setting up the scene for 3D Projection

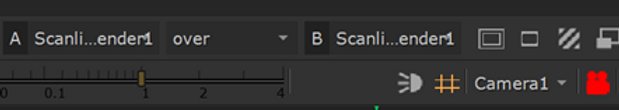

Setting up a scene in Nuke that will properly export 3D camera data for Maya is a complex process. First, bring in undistorted footage using the same workflow as last week. Once this has been done, add some ScanlineRender nodes over your undistorted footage. This ensures that the camera is locked in place, ready for geometry to be introduced and edited in Nuke.

Next, bring in a CameraTracker node to track the movement of the camera. This should be done after the initial stabilisation of the camera, which we looked at in week one.

Following this, you can use a grid viewed through a virtual camera to give the scene a sense of depth, so that it is set up for the introduction of the 3D camera projection.

Bringing in the 3D Camera Projection

After you have set up the ScanlineRender nodes, the camera tracker and the grid for the scene's depth, you can bring in the 3D camera system along with a Project3D node. Because of the shape of this node block, it is really important to keep it organised and clean in the workflow. Otherwise, following where the pipes are going to and from can become confusing, and the script may not output and render correctly.
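To make the idea behind a camera projection more concrete, here is a minimal Python sketch of the geometry involved. It is written outside Nuke and does not use Nuke's API; the class and method names are hypothetical. It assumes a simplified pinhole camera that only moves (no rotation) and looks down the -Z axis, with the focal length and film back (aperture) sizes given in millimetres. For any 3D point on the geometry, it works out where that point lands in the camera's image, which is the lookup a projection needs in order to pick the plate colour for that point.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class PinholeCamera:
    """Hypothetical, simplified camera: translation only, looking down -Z."""
    position: Vec3
    focal_mm: float      # focal length in millimetres
    haperture_mm: float  # film back width in millimetres
    vaperture_mm: float  # film back height in millimetres

    def project(self, point: Vec3) -> Optional[Tuple[float, float]]:
        """Return (u, v) in 0..1 image space, or None if the point is behind the camera."""
        # World space -> camera space (this sketch ignores camera rotation)
        x = point[0] - self.position[0]
        y = point[1] - self.position[1]
        z = point[2] - self.position[2]
        if z >= 0.0:
            return None  # at or behind the lens, so it cannot receive the projection
        depth = -z
        # Perspective divide: where the point lands on the film back, in mm
        film_x = self.focal_mm * x / depth
        film_y = self.focal_mm * y / depth
        # Film back millimetres -> normalised image coordinates (0.5 is frame centre)
        u = 0.5 + film_x / self.haperture_mm
        v = 0.5 + film_y / self.vaperture_mm
        return u, v
```

With illustrative camera values (a 50 mm focal length on a 24.576 x 18.672 mm film back), a point 10 units straight ahead of the camera lands in the centre of the frame at (0.5, 0.5), and points further off to the side move towards the frame edges. A real projection also has to account for camera rotation, lens distortion and occlusion.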

Introducing 3D Assets pre-Maya

Nuke provides the option to bring in some 3D assets before you output the files to Maya. This is important, as you can do a range of setup work, for things such as ground planes, before you bring your CG assets into Maya. The first of these is setting some of the points from the camera tracker as "ground points". This is useful as it allows you to predetermine where the ground is within the scene.
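Setting ground points essentially tells the tracker which of its solved points lie on a common plane. As a rough illustration in plain Python (not the CameraTracker's actual solver, and with hypothetical function names), the sketch below builds a plane from three ground points and measures how far any other point sits above it. A real setup would typically fit a best-fit plane through more points; three are used here only to keep the maths short. It assumes a Y-up world, as in Nuke.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]
Plane = Tuple[Vec3, float]  # (unit normal n, offset d) for the plane n . p = d


def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def ground_plane(p0: Vec3, p1: Vec3, p2: Vec3) -> Plane:
    """Build a plane through three ground points, with its normal pointing up (+Y)."""
    n = cross(sub(p1, p0), sub(p2, p0))
    length = math.sqrt(dot(n, n))
    if length == 0.0:
        raise ValueError("ground points are collinear; pick points that span an area")
    n = (n[0] / length, n[1] / length, n[2] / length)
    if n[1] < 0.0:  # flip so that 'above the ground' gives a positive height
        n = (-n[0], -n[1], -n[2])
    return n, dot(n, p0)


def height_above(point: Vec3, plane: Plane) -> float:
    """Signed distance from the plane: positive above the ground, negative below."""
    n, d = plane
    return dot(n, point) - d
```

Flipping the normal towards +Y means the result does not depend on the order in which the ground points were picked, so a CG asset placed at height 0 sits on the tracked floor.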

The second is grids, which can be brought in to identify areas of a scene where you may want to cast shadows and reflections. You also have the ability to bring in specific 3D assets early on (such as a sphere), which can also be rendered into the scene and can then have Arnold surfaces applied to them in Maya to allow for things such as reflections.

This has been a slightly shorter post this week; next week we'll be looking at how to create an HDR image before we head into Maya to edit our 3D assets.

Further Research and Reading

This week, I wanted to look at how to refine the camera tracking point clouds in Nuke when setting up the 3D camera projection, and found this YouTube video: https://www.youtube.com/watch?v=U0GKrbjZQfE

The video gives additional information on how to refine the point clouds created by the 3D camera tracker before you render out your footage for Maya. This was interesting as it went through how to increase the number of points in the point cloud, and how to refine and adjust the error threshold so that more of the points in the cloud are accurate when rendered out into Maya.
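The idea of tightening the error threshold can be sketched as below. This is a hypothetical data model in plain Python, not Nuke's CameraTracker API: each tracked point is assumed to carry a solve (reprojection) error in pixels, and points above a chosen maximum error are rejected. Lowering the threshold keeps fewer points but leaves the surviving cloud more accurate on average.

```python
from dataclasses import dataclass
from statistics import mean
from typing import List, Tuple


@dataclass
class TrackPoint:
    """Hypothetical solved track: a 3D position plus its solve error."""
    name: str
    position: Tuple[float, float, float]
    error_px: float  # assumed per-point reprojection error, in pixels


def filter_by_error(points: List[TrackPoint],
                    max_error_px: float) -> Tuple[List[TrackPoint], List[TrackPoint]]:
    """Split points into (kept, rejected) using a maximum error threshold."""
    kept = [p for p in points if p.error_px <= max_error_px]
    rejected = [p for p in points if p.error_px > max_error_px]
    return kept, rejected


def mean_error(points: List[TrackPoint]) -> float:
    """Average error of a set of points (0.0 for an empty set)."""
    return mean(p.error_px for p in points) if points else 0.0


if __name__ == "__main__":
    # Made-up example cloud, purely to show the trade-off
    cloud = [
        TrackPoint("a", (0.0, 0.0, -5.0), 0.3),
        TrackPoint("b", (1.0, 0.0, -6.0), 0.8),
        TrackPoint("c", (0.0, 1.0, -7.0), 2.5),
        TrackPoint("d", (2.0, 0.5, -4.0), 1.1),
    ]
    for threshold in (3.0, 1.0, 0.5):
        kept, _ = filter_by_error(cloud, threshold)
        print(threshold, len(kept), round(mean_error(kept), 2))
```

This is also why adding more points matters: the more tracks there are to begin with, the more can be rejected while still leaving enough to solve the camera and build geometry from.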